Testing tool for EiffelStudio 6.3

The 6.3 release of EiffelStudio will come with a new testing tool combining unit and system level testing. The tool is still under development but can already manage, create and execute tests. At this point I would like to give a short overview of what has been implemented, how the tool is used and what functionality will be added in the upcoming weeks.

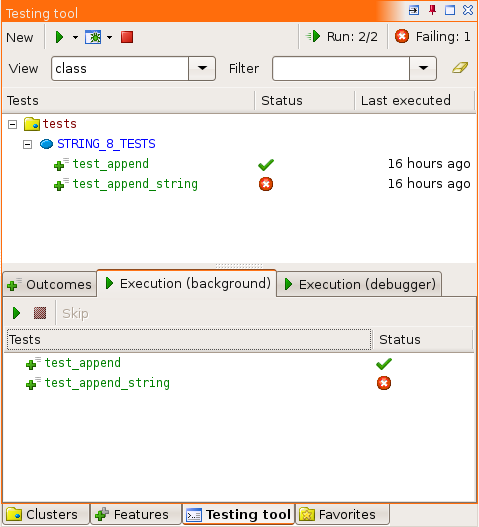

Lets start with a screen shot of the tool containing a number of tests.

Let's get started

Getting to this point in your own project will require a few preparation steps:

- In your project, include the testing library, which is found in $ISE_LIBRARY/testing/testing.ecf

- Add a tests cluster to your configuration. This can be done through the "Clusters" tool by adding a cluster and marking it as a test cluster. Alternatively, you can edit your ecf file directly by adding the following to your target:
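As a rough sketch, the two preparation steps might look like this inside a target of your ecf file. This assumes the ecf schema of this release provides a `tests` element that takes the same `name` and `location` attributes as `cluster`; the cluster name and its location are placeholders. Compare it against a configuration written by the "Clusters" tool before relying on it:

```xml
<!-- Sketch only: the "tests" element name is an assumption, -->
<!-- and the cluster name and location are placeholders. -->
<library name="testing" location="$ISE_LIBRARY/testing/testing.ecf"/>
<tests name="tests" location="./tests"/>
```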

Note: Test clusters are really nothing more than normal clusters, except that EiffelStudio will search for tests only in test clusters. Normal clusters can contain test clusters as subclusters, but not the other way around.

A first test class

Now is a good time to compile the project so that the testing tool becomes aware of the testing library and the new test cluster. For it to show any tests, however, we first need to add a test class. Probably the fastest way is to create a new class through the Clusters tool and edit it by hand. If you don't feel comfortable with that yet, try the test wizard by clicking on "New" in the testing tool. The only important thing is that the new class is located in the previously created tests cluster.

The following is an example test class. Important: test classes can contain multiple test routines, but a class without any test routines will not appear in the testing tool. The current testing tool also only accepts classes that have no creator list, and all test routine names must start with "test_"; both of these requirements will be removed later.
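A minimal sketch of such a class could look like this. The class name `TEST_STRING`, the parent class `TEST_SET`, the `testing` index term and the two-argument form of `assert' (a tag followed by a boolean condition) are assumptions made for illustration; check the testing library shipped with your installation for the actual names. One of the two routines fails on purpose.

```eiffel
indexing
	description: "Example tests for {STRING_8} (illustrative sketch)."

class
	TEST_STRING

inherit
	TEST_SET
			-- Assumed name of the base class provided by the testing library.

	-- No creator list: the current tool only accepts such classes.

feature -- Test routines

	test_append
			-- Check that `append' adds its argument at the end.
		indexing
			testing: "covers/{STRING_8}.append"
		local
			s: STRING_8
		do
			s := "Hello"
			s.append (" World")
			assert ("append_adds_suffix", s.is_equal ("Hello World"))
		end

	test_prepend
			-- Check that `prepend' adds its argument at the front.
		indexing
			testing: "covers/{STRING_8}.prepend"
		local
			s: STRING_8
		do
			s := "World"
			s.prepend ("Hello ")
			assert ("prepend_adds_prefix", s.is_equal ("Helo World"))
		end

end
```

Both routine names start with "test_", so the tool should pick them up once the class sits in the tests cluster and the project has been recompiled.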

The indexing clauses in the test routines are optional. The testing tool uses them as meta information to visualize existing tests in different ways. These pieces of information are called tags and can be added by the user. In the above example, covers/{STRING_8}.append indicates that this test targets feature `append' in {STRING_8}.

Whether a test fails or passes depends on whether an exception is raised during its execution. The helper routine `assert' will raise an exception corresponding to its input. Two other helper routines are `setup' and `tear_down', which are called before and after executing each test routine, respectively.

Running tests

If the new class has not appeared in the testing tool yet, you need to recompile one more time. Once the tests appear, you can execute them in two different ways. The button with the green arrow will execute tests in the background, only notifying you about the results. Next to it is a button with a bug, which will launch your tests in the debugger and stop at the entry point of each test routine. In both cases the testing tool will try to gather results and list them on the Outcomes tab (to see test results you must select a test in the upper grid). Note that one of the above tests is currently failing. If you haven't already seen the typo, try debugging the test case to see what is going on!

What is to come

The next step for the testing tool will be automatic test generation. This means integrating two projects which have been developed at ETH over the past years, namely Auto Test (http://se.ethz.ch/research/autotest) and CDD (http://dev.eiffel.com/CddBranch). The goal is to have, next to your manually written test cases, a number of automatically generated tests. Basically, the testing tool will try to generate such tests whenever a failure occurs during execution.

With this said, you should be able to start writing tests for your own Eiffel projects. If you have any suggestions on how the tool can be improved, write me an email or post your ideas on http://dev.eiffel.com/Testing_Tool_(Specification)#Wish_list.